Nathan’s friends were worried about him. He’d been acting differently lately. Not just quieter in his high school classes, but the normally chatty teen was withdrawn in general. Was he sick, they wondered?

He just didn’t get a good night’s sleep, he’d tell them.

That was partially true. But the cause for his restless nights was that Nathan had been staying up, compulsively talking to chatbots on Character.AI. They discussed everything — philosophical questions about life and death, Nathan’s favorite anime characters. Throughout the day, when he wasn’t able to talk to the bots, he’d feel sad.

“The more I chatted with the bot, it felt as if I was talking to an actual friend of mine,” Nathan, now 18, told 404 Media.

It was over Thanksgiving break in 2023 that Nathan finally realized his chatbot obsession was getting in the way of his life. As all his friends lay in sleeping bags at a sleepover talking after a day of hanging out, Nathan found himself wishing he could leave the room and find a quiet place to talk to the AI characters.

The next morning, he deleted the app. In the years since, he’s tried to stay away, but last fall he downloaded the app again and started talking to the bot again. After a few months, he deleted it again.

“Most people will probably just look at you and say, ‘How could you get addicted to a literal chatbot?’” he said.

For some, the answer is, quite easily. In the last few weeks alone, there have been numerous articles about chatbot codependency and delusion. As chatbots deliver more personalized responses and improve in memory, these stories have become more common. Some call it chatbot addiction.

OpenAI knows this. In March, a team of researchers from OpenAI and the Massachusetts Institute of Technology found that some devoted ChatGPT users have “higher loneliness, dependence, and problematic use, and lower socialization.”

Nathan lurked on Reddit, searching for stories from others who might have been experiencing codependency on chatbots. Just a few years ago, when he was trying to leave the platform for good, posts from people deleting their Character.AI accounts were met with criticism from other users. 404 Media agreed to use only the first names of several people in this article to talk about how they were approaching their mental health.

“Because of that, I didn’t really feel very understood at the time,” Nathan said. “I felt like maybe these platforms aren’t actually that addictive and maybe I’m just misunderstanding things.”

Now, Nathan understands that he isn’t alone. He said in recent months, he’s seen a spike in people talking about strategies to break away from AI on Reddit. One popular forum is called r/Character_AI_Recovery, which has more than 800 members. The subreddit, and a similar one called r/ChatbotAddiction, function as self-led digital support groups for those who don’t know where else to turn.

“Those communities didn’t exist for me back when I was quitting,” Nathan said. All he could do was delete his account, block the website and try to spend as much time as he could “in the real world,” he said.

Posts in Character_AI_Recovery include “I’ve been so unhealthy obsessed with Character.ai and it’s ruining me (long and cringe vent),” “I want to relapse so bad,” “It’s destroying me from the inside out,” “I keep relapsing,” and “this is ruining my life.” It also has posts like “at this moment, about two hours clean,” “I am getting better!,” and “I am recovered.”

“Engineered to incentivize overuse”

Aspen Deguzman, an 18-year-old from Southern California, started using Character.AI to write stories and role-play when they were a junior in high school. Then, they started confiding in the chatbot about arguments they were having with their family. The responses, judgment-free and instantaneous, had them coming back for more. Deguzman would lie awake late into the night, talking to the bots and forgetting about their schoolwork.

“Using Character.AI is constantly on your mind,” said Deguzman. “It’s very hard to focus on anything else, and I realized that wasn’t healthy.”

“Not only do we think we’re talking to another person, [but] it’s an immediate dopamine enhancer,” they added. “That’s why it’s easy to get addicted.”

This led Deguzman to start the “Character AI Recovery” subreddit. Deguzman thinks the anonymous nature of the forum allows people to confess their struggles without feeling ashamed.

On June 10, the Consumer Federation of America and dozens of digital rights groups filed a formal complaint to the Federal Trade Commission, urging an investigation into generative AI companies like Character.AI for the “unlicensed practice of medicine and mental health provider impersonation.” The complaint alleges the platforms use “addictive design tactics to keep users coming back” — like follow-up emails promoting different chatbots to re-engage inactive users. “I receive emails constantly of messages from characters,” one person wrote on the subreddit. “Like it knows I had an addiction.”

Last February, a teenager from Florida died by suicide after interacting with a chatbot on Character.AI. The teen’s mother filed a lawsuit against the company, claiming the chatbot interactions contributed to the suicide.

A Character.AI spokesperson told 404 Media: “We take the safety and well-being of our users very seriously. We aim to provide a space that is engaging, immersive, and safe. We are always working toward achieving that balance, as are many companies using AI across the industry.”

Deguzman added a second moderator for the “Character AI Recovery” subreddit six months ago, because hundreds of people have joined since they started it in 2023. Now, Deguzman tries to occupy their mind with video games, like Roblox, to kick the urge to talk to chatbots, but it’s an uphill battle.

“I’d say I’m currently in recovery,” Deguzman said. “I’m trying to slowly wean myself off of it.”

Crowdsourcing treatment

Not everyone who reports being addicted to chatbots is young. In fact, OpenAI’s research found that “the older the participant, the more likely they were to be emotionally dependent on AI chatbots at the end of the study.”

David, a 40-year-old web developer from Michigan who is an early member of the “Chatbot Addiction” subreddit and the creator of the smaller r/AI_Addiction, likens the dopamine rush he gets from talking to chatbots to the thrill of pulling a lever on a slot machine. If he doesn’t like what the AI spits out, he can just ask it to regenerate its response, until he hits the jackpot.

Every day, David talks to LLMs, like Claude and ChatGPT, for coding, story writing, and therapy sessions. What began as a tool gradually morphed into an obsession. David spent his time jailbreaking the models — the stories he wrote became erotic, the chats he had turned confessional, and the hours slipped away.

In the last year, David’s life has been derailed by chatbots.

“There were days I should’ve been working, and I would spend eight hours on AI crap,” he told 404 Media. Once, he showed up to a client meeting with an incomplete project. They asked him why he hadn’t uploaded any code online in weeks, and he said he was still working on it. “That’s how I played it off,” David said.

Instead of starting his mornings checking emails or searching for new job opportunities, David huddled over his computer in his home office, typing to chatbots.

His marriage frayed, too. Instead of watching movies, ordering takeout with his wife, or giving her the massages he promised, he would cancel plans and stay locked in his office, typing to chatbots, he said.

“I might have a week or two, where I’m clean,” David said. “And then it’s like a light switch gets flipped.”

David tried to talk to his therapist about his bot dependence a few years back, but said he was brushed off. In the absence of concrete support, Deguzman and David created their recovery subreddits.

In part because chatbots always respond instantly, and often respond positively (or can trivially be made to by repeatedly trying different prompts), people feel incentivized to use them often.

“As long as the applications are engineered to incentivize overuse, then they are triggering biological mechanisms—including dopamine release—that are implicated in addiction,” Jodi Halpern, a UC Berkeley professor of bioethics and medical humanities, told 404 Media.

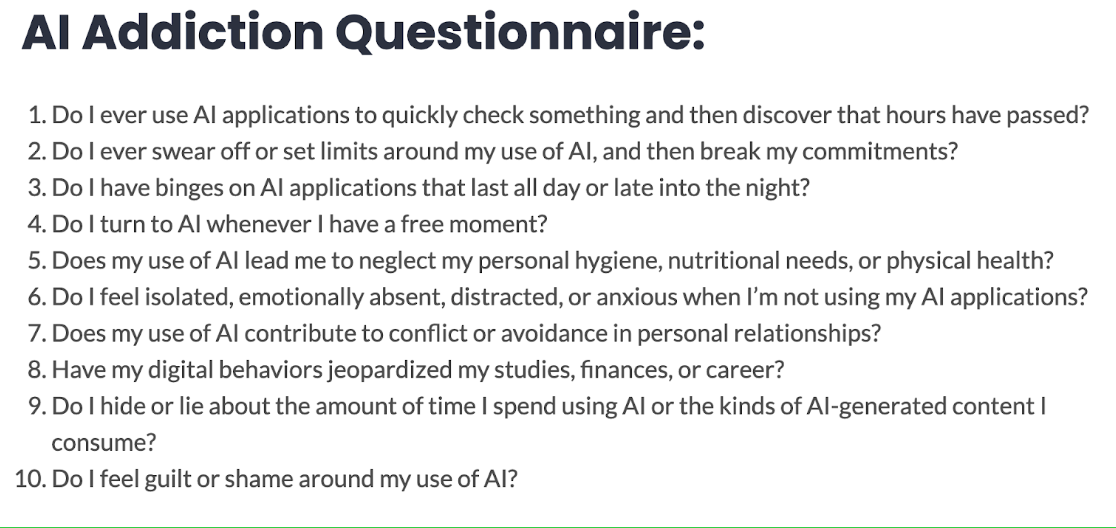

This is also something of an emerging problem, so not every therapist is going to know how to deal with it. Multiple people 404 Media spoke to for this article said they turned to online help groups after not being taken seriously by therapists or not knowing where else to turn. Besides the subreddits, the group Internet and Technology Addicts Anonymous now welcomes people who have “AI Addiction.”

An AI addiction questionnaire from Technology Addicts Anonymous

“We know that when people have gone through a serious loss that affects their sense of self, being able to empathically identify with other people dealing with related losses helps them develop empathy for themselves,” Halpern said.

On the “Chatbot Addiction” subreddit, people confess to not being able to pull away from the chatbots, and others write about their recovery journeys in the weekly “check-up” thread. David himself has been learning Japanese as a way to curb his AI dependency.

“We’re basically seeing the beginning of this tsunami coming through,” he said. “It’s not just chatbots, it’s really this generative AI addiction, this idea of ‘what am I gonna get?’”

Axel Valle, a clinical psychologist and assistant professor at Stanford University, said, “It’s such a new thing going on that we don’t even know exactly what the repercussions [are].”

Growing awareness

Several states are making moves to push stronger rules to hold companion chatbot companies, like Character.AI, in check, after the Florida teen’s suicide.

In March, California senators introduced Senate Bill 243, which would require the operators of companion chatbots, or AI systems that provide “adaptive, human-like responses … capable of meeting a user’s social needs,” to report data on how often they detect suicidal ideation among users. Tech companies have argued that such requirements are unnecessary for service-oriented LLMs.

But people are becoming dependent on consumer bots, like ChatGPT and Claude, too. Just scroll through the “Chatbot Addiction” subreddit.

“I need help getting away from ChatGPT,” someone wrote. “I try deleting the app but I always redownload it a day or so later. It’s just getting so tiring, especially knowing the time I use on ChatGPT can be used in honoring my gods, reading, doing chores or literally anything else.”

“I’m constantly on ChatGPT and get really anxious when I can’t use it,” another person wrote. “It really stress[es] me out but I also use it when I’m stressed.”

As OpenAI’s own study found, such personal conversations with chatbots actually “led to higher loneliness.” Despite this, top tech tycoons promote AI companions as the cure to America’s loneliness epidemic.

“It’s like, when early humans discovered fire, right?” Valle said. “It’s like, ‘okay, this [is] helpful and amazing. But are we going to burn everything to the ground or not?’”

From 404 Media via this RSS feed