New edition of AI Killed My Job, focusing on how AI’s fucked the copywriting industry.



Remember the flood of offensive Pixaresque slop that happened in 2023? We’re gonna see something similar thanks to this deal for sure.

But this is the military, so it’s not like details matter or lives are on the line if you’re out in the field and get a sheet of instructions that are completely detached from reality.

It's not like the damage to morale this will near-certainly cause will have some knock-on effects, either.

EDIT: “Soldier dissatisfaction” didn’t accurately describe what this plan will do - changed it to “damage to morale”.

Found a new and lengthy sneer on Bluesky, mocking the promptfondlers’ gullibility.

How can it be called open source when they cannot legally share the sources with you because they’re stolen?

Easy: the people calling it that believe exploiting people and stealing their work is okay.

Heartbreaking news today.

In a major setback for right-to-repair, iFixit has jumped on the slop bandwagon, introducing an “AI repair helper” to their website that steals “the knowledge base of over 20 years of repair experts” (to quote their dogshit announcement on YouTube) and uses it to hallucinate “repair guides” and “step-by-step instructions” for its users.

It's the most obvious explanation for their behaviour I can think of.

Perhaps, this is hell.

We’re preparing your kid for the glorious AI future. That is, a life of getting nickel-and-dimed by corporate parasites feeding off public money.

And being utterly dependent on said corporate parasites, thanks to the deskilling machine wrecking their critical thinking and general mental acuity at such a young age.

From a quick search, it hasn’t been posted here before - thanks for dropping it.

In a similar vein, there’s a guide to recognising AI-extruded music on Newgrounds, written by two of the site’s Audio Moderators. This has been posted here before, but having every “slop tell guide” in one place is more convenient.

Kovid Goyal, the primary dev of ebook management tool Calibre, has spat in the face of its users by forcing AI “features” into it.

the article headline: “Chatbots are now rivaling social networks as a core layer of internet infrastructure”

Counterpoint: “vibe coding” is rotting internet infrastructure from the inside, AI scrapers are destroying the commons through large-scale theft, and chatbots are drowning everything else through nonstop lying.

I’m a bit more familiar with Case - I mainly knew her for making web games like :the game: trilogy, Nothing to Hide and We Become What We Behold.

Fucking sucks to see she’s bought into this shit.

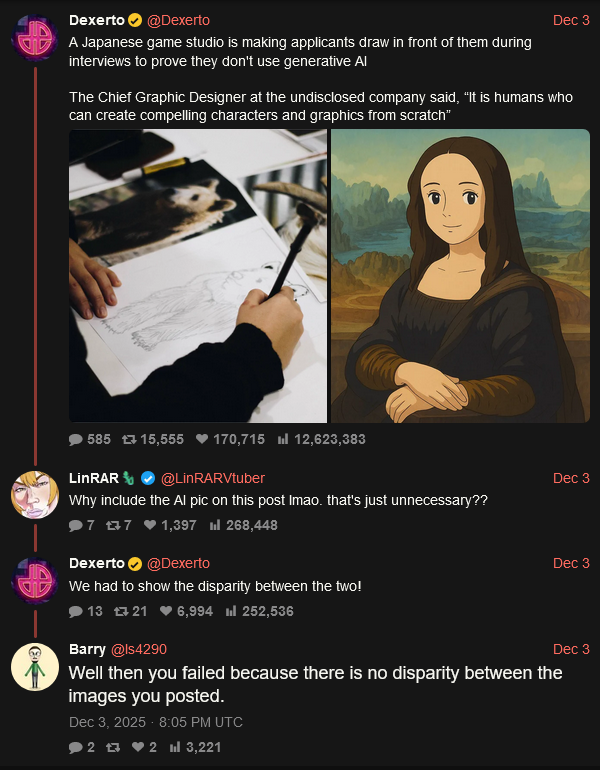

Dexerto has reported on an unnamed Japanese game studio weeding out promptfondlers (by having applicants draw something in person during interviews).

Unsurprisingly, the replies have become a promptfondler shooting gallery. Personal “favourite” goes to the guy who casually admits he can’t tell art from slop:

That is a pretty solid point - constant updates are something online systems need on a regular basis.

AFAIK FOSS has avoided being slopified by AI, so we should hopefully be pretty far from that point.

To (somewhat) reiterate a potentially extreme position of mine, shit like this is why society needs to avoid adopting new software for the time being, if not actively cut back on software usage whenever possible.

Even before AI was a thing, the IT industry was already a failure-ridden mess that actively refused to learn, but the rise of AI and “vibe coding” has made things so much worse in practically every regard. At this point, any new software should be treated as a liability until proven otherwise.

Major RAM/SSD manufacturer Micron just shut down its Crucial brand to sell shovels in the AI gold rush, worsening an already-serious RAM shortage for consumer parts.

Just another way people are paying more for less, thanks to AI.

They are trusting a “tool” that categorically cannot be trusted. They are fools to trust it.

New and lengthy sneer from Current Affairs just dropped: AI is Destroying the University and Learning Itself

Rereading’s also got a blog up now