…dumb me was translating the comics by hand, without realising that the original artist had an English version of them.

Lvxferre

The catarrhine who invented a perpetual motion machine, by dreaming at night and devouring its own dreams through the day.

- 20 Posts

- 1.44K Comments

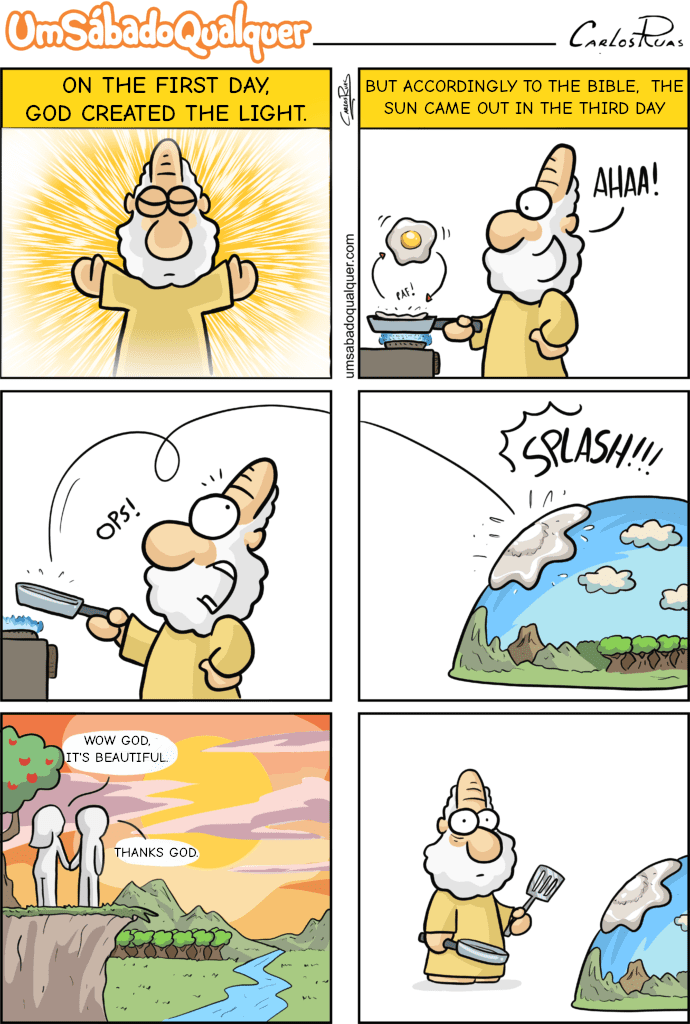

The joke is that Einstein calls God a genius, for planning all this stuff, when God didn’t plan anything - he did it on a whim. It relies partially on the characterisation, as across Um Sábado Qualquer’s comics, God is often represented as petty, clueless and/or dumb. Like this:

6·7 hours ago

I’ve seen programmers claiming that it helps them out, too. Mostly to give you an idea of how to tackle a problem, instead of copypasting the solution (as it’ll likely not work).

My main uses of the system are:

- Probing vocab to find the right word in a given context.

- Fancy conjugation/declension table.

- Spell-proofing.

It works better than going to Wiktionary all the time, or staring at my work until I happen to find some misspelling (like German das vs. dass; since both are legit words, spellcheckers don’t pick it up).

One thing to watch out for is that the translation will be more often than not tone-deaf, so you’re better off not wasting your time with longer strings unless you’re fine with something really sloppy, or you can provide it with more context. The latter, however, takes effort.

763·20 hours ago

Large “language” models decreased my workload for translation. There’s a catch though: I choose when to use them, instead of being required to use them even when it doesn’t make sense and/or where I know that the output will be shitty.

And, if my guess is correct, those 77% are caused by overexcited decision takers in corporations trying to shove AI down every single step of the production.

7·1 day ago

If the description provided by the article is accurate, and it remains so in the near future, that won’t be an issue for DDG users. All that DDG would need to do is to pull the results out of the side panel, instead of the central space.

2·1 day ago

Ditto. It isn’t just a matter of watching Paradox get rekt (although the idea gives me warm feelings), but also that the engine is… bad. As in, we’re in 2024 and it has encoding issues, pathfinding is awful, and I’m almost certain that part of the EU4 fort woes are caused by the devs working around engine limitations.

16·1 day ago

[Wester, Paradox’s CEO] “amid the well-deserved self-criticism, it is worth reminding ourselves that we have solid footing, because the foundation of our business is doing well.”

No, Wester, you don’t have solid footing. Stop fooling yourselves.

The solid footing for a game developer is the players’ trust, something that Paradox has been using as toilet paper since 2016~2018 or so. At this rate you’re only waiting for other companies to release grand strategy games - or even tweak their 4X games into GSG - and you’re done, since you’re still living off the CK/EU/Vicky series.

102·1 day ago

> I know you are, but the argument that an LLM doesn’t understand context is incorrect

Emphasis mine. I am talking about the textual output. I am not talking about context.

> It’s not human level understanding

Additionally, your obnoxiously insistent comparison between LLMs and human beings boils down to a red herring.

Not wasting my time further with you.

[For others who might be reading this: sorry for the blatantly rude tone, but I have little to no patience for people who distort what others say, like the one above.]

4·1 day ago

4chan-in-a-bottle? That would be fun.

111·1 day ago

> The issue with your assertion is that people don’t actually work a similar way.

I’m talking about LLMs, not about people.

18·2 days ago

Upvoted as it’s unpopular even if I heavily disagree with it.

Look at the big picture.

There’s a high chance that whatever is causing that flamewar - be it a specific topic, or user conflicts, or whatever - will pop up again. And again. And again.

You might enjoy watching two muppets shitposting at each other. Frankly? I do it too. (Sometimes I’m even one of the muppets.) However this gets old really fast and, even if you’re emotionally detached from the whole thing, it reaches a point where you roll your eyes and say “fuck, yet another flamewar. I just want to discuss the topic dammit”.

Plenty of people, however, do not enjoy it; and once you allow flamewars, you’re basically telling them “if you don’t want to see this, fuck off”. Some of those will be people who are emotionally invested enough in your community to actually contribute to it, unlike the passing troll stirring trouble.

1·2 days ago

> I suppose it’s improper to point and laugh? // I see no reason to respond to bad faith arguments.

It’s improper, sure, but I do worse. You seriously don’t want to proselytise Christian babble in my ear if I’m in a bad mood. It sounds like this:

[Christian] “God exists because you can’t disprove him”

[Me] “Yeah, just like you can’t disprove that your mum got syphilis from sharing a cactus dildo with Hitler. Now excuse me it’s Sunday morning and I want to sleep.”

13·2 days ago

Your “ackshyually” is missing the point.

93·2 days ago

> It doesn’t need to be filtered into human / AI content. It needs to be filtered into good (true) / bad (false) content. Or a “truth score” for each.

That isn’t enough because the model isn’t able to reason.

I’ll give you an example. Suppose that you feed the model with both sentences:

- Cats have fur.

- Birds have feathers.

Both sentences are true. And based on the vocabulary of both, the model can output the following sentences:

- Cats have feathers.

- Birds have fur.

Both are false but the model doesn’t “know” it. All that it knows is that “have” is allowed to go after both “cats” and “birds”, and that both “feathers” and “fur” are allowed to go after “have”.
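To make the point above concrete, here’s a toy sketch in Python. Mind that this is a plain word-adjacency (bigram) table, not how an actual LLM is built; it only illustrates the “this word is allowed after that word” idea. The function names `train_bigrams` and `generate_all` are made up for the example.

```python
from collections import defaultdict


def train_bigrams(sentences):
    """Record, for each word, the set of words seen right after it."""
    follows = defaultdict(set)
    for sentence in sentences:
        # "<s>" and "</s>" are assumed start/end markers for this sketch.
        words = ["<s>"] + sentence.lower().rstrip(".").split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            follows[prev].add(nxt)
    return follows


def generate_all(follows, max_len=5):
    """List every sentence that the adjacency table allows."""
    results = []

    def walk(word, path):
        if word == "</s>":
            results.append(" ".join(path).capitalize() + ".")
            return
        if len(path) >= max_len:
            return  # safety cap, in case the table contains loops
        for nxt in sorted(follows[word]):
            walk(nxt, path if nxt == "</s>" else path + [nxt])

    walk("<s>", [])
    return results


follows = train_bigrams(["Cats have fur.", "Birds have feathers."])
for sentence in generate_all(follows):
    print(sentence)
```

The table allows four sentences: the two true ones it was fed, plus “Cats have feathers.” and “Birds have fur.”. Nothing in the table marks the last two as false.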

403·2 days ago

Model degeneration is an already well-known phenomenon. The article already explains well what’s going on so I won’t go into details, but note how this happens because the model does not understand what it is outputting - it’s looking for patterns, not for the meaning conveyed by said patterns.

Frankly at this rate might as well go with a neuro-symbolic approach.

11·2 days ago

Even back when I used Reddit, I had such a burning hate against Reddit results that I blacklisted them. So this is actually improving things for me, as I use DDG by default.

As such I hope that this decision becomes another nail in each of their (Google and Reddit’s) coffins.

8·2 days ago

An algorithm, among the many other things they ruin, is causing a rubbish article in RPS.

131·3 days ago

IMO it’s more useful to learn how to identify and reply to fallacies and bad premises in general, than to focus on the ones that Christian proselytism uses.

For example, the ones in the video are:

- “Either god created us, or we are here by random chance” - false dichotomy + strawman

- “God exists because you can’t disprove him” - inversion of the burden of proof

- “Objective morality proves god exists” - naturalistic fallacy + bad premise

- “Everything that exists was created. Therefore god exists” - bad premise

- “You’re not educated enough” - ad hominem

Others that you need to look for are:

- invincible authority (a type of appeal to authority) - X was said by authority, thus X is true. Christians love this crap.

- fallacy fallacy - X is backed up by a fallacy, so X is false

- ad populum - lots of suckers believe it, so it’s true

6·3 days ago

IMO Wube (Factorio’s devs) is a lot like ConcernedApe, when it comes to not violating the players’ trust. That’s why for example Factorio never goes on sale - because the people there believe that it would be disrespectful to charge a larger price to some than others, simply because the others delayed buying it. (Cough Paradox Interactive aka Hipsters’ EA cough cough)

They also have the decency to offer you a demo so you can make an informed decision before buying it, in a clear contrast with certain companies that expect you to buy it blind.

About the DLC: I’m one who typically pirates games, mind you, but I’m probably buying it, just like I did with the base game. The base game isn’t incomplete or anything like that; fuck, people compare it with crack for a reason - it’s functional, polished, and fun to the point of addictiveness. And the FFF (devlogs) clearly show enough content to be worth it.

Note that, even if we refer to Java, Python, Rust etc. by the same word “language” as we refer to Mandarin, English, Spanish etc., they’re apples and oranges - one set is unlike the other, even if both have some similarities.

That’s relevant here, for two major reasons:

Regarding the first point, I’ll give you an example. You suggested abstract syntax trees for the internal representation of programming code, right? That might work really well for programming, dunno, but for human languages I bet that it would be worse than the current approach. That’s because, for human languages, what matters the most are the semantic and pragmatic layers, and those are a mess - with the meaning of each word in a given utterance being dictated by the other words there.