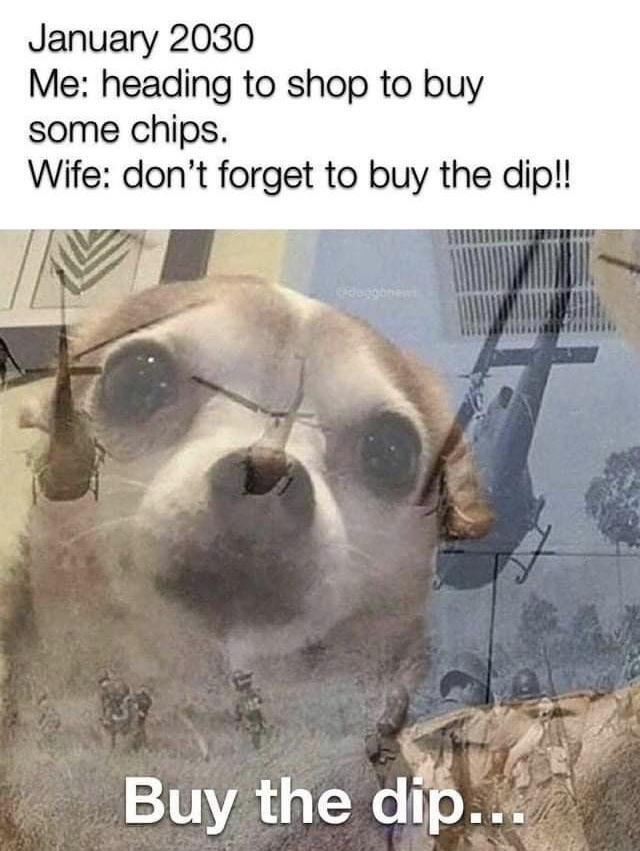

AI CEOs be like

Online Communism 😃

Real Life Communism 😠

Communism for fascists and fascism for the commons. It’s the american way.

More like theft of data = “communism”

I remember when copying data wasn’t theft, and the entire internet gave the IP holders shit for the horrible copyright laws…

I work as an AI engineer and any company that has a legal department that isn’t braindead isn’t stealing content. The vast majority of content posted online is done so under a creative commons licence, which allows other people to do basically whatever they want with that content, so it’s in no way shape or form stealing.

Yeah, I know, but the press picks a few rare cases and says that this goes for everyone.

You know how it is.

It really depends on what the site’s terms of service/usage agreement says about the content posted on the site.

For example, a site like ArtStation has users agree to a usage license that lets the site host and transmit the images in their portfolios. However, there is no language saying that visitors are also granted these rights. So you may be able to collect the site text for fair use, but the art itself requires reaching out for anything other than personal/educational use.

Well, theft of the labor of contributors to give to non contributors is communism … So, your statement is true, it’s just more broad than that.

If it’s published online, it’s not theft. That’s like saying that if I publish a book and someone uses it to learn a language, then they are stealing my book.

Ok, then picture this: a web scraper is copying code whose copyright notice says it is forbidden to modify the code, to publish it under a different name, or to sell it (for example, a method that calculates the inverse square root really fast).

When this code is used as training data, most language models may actually paste it back out, or write it with little change. That is because most language models aren’t built around a purpose given by the user, the way an AI that is supposed to look at pictures of dogs and cats and decide which is which would be. Instead, they are based on the following scheme:

- Read previous text

- Predict what letter will follow

- Repeat until user interferes.

Because language models work this way, if I trained one only on the novel “Alice in Wonderland”, for example, there is a high possibility that the model would reproduce parts of it.
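To make the read/predict/repeat loop concrete, here is a minimal sketch in Python. It is a toy character-level lookup model (a Markov chain, not a neural network), and the names `train`, `generate`, and `corpus` are made up for this illustration. Trained on a single passage, it has only ever seen one continuation for each context, so it can only replay its training text:

```python
from collections import Counter, defaultdict


def train(text, order):
    """Count which character follows each `order`-character context."""
    counts = defaultdict(Counter)
    for i in range(len(text) - order):
        context = text[i:i + order]
        next_char = text[i + order]
        counts[context][next_char] += 1
    return counts


def generate(counts, seed, length):
    """Read the previous text, predict the next character, repeat."""
    order = len(seed)
    out = seed
    for _ in range(length):
        followers = counts.get(out[-order:])
        if not followers:
            break  # this context never appeared in training
        # Greedy choice: always take the most frequent next character.
        out += followers.most_common(1)[0][0]
    return out


# Opening words of the novel, used here as the entire training set.
corpus = "Alice was beginning to get very tired of sitting by her sister on the bank"
model = train(corpus, order=8)
sample = generate(model, corpus[:8], len(corpus))
print(sample == corpus)
```

Every 8-character context in this passage occurs only once, which is why the greedy loop walks straight back through the original text.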

But there is a way to fix this problem: if we broaden the training data a lot, the chance that the output would be considered plagiarism goes down.

Upon closer inspection there is another problem, though, because AI (at this point in time) doesn’t have outside influences in the sense that humans do. A human goes through every day of their life with a chance of something happening that modifies their brain structure through emotion, for example chronic depression. This influences a person’s output not only in their symptoms, but also in the way they would write text, for example.

The consequence is that the artist may use this emotional change to express it with their art.

Every day influences the artist differently and inspires them with unseen and new thoughts.

The AI (today) has the problem that it definitely retells the stories it has heard, again and again, like Aristotle (?) says.

The outer influence is missing for the AI at this point in time.

If you want to have a model that can give you things that have never been written before, or that don’t even seem like anything there has ever been, you have to give it these outer influences.

And there is a big problem coming up: yes, this process could be implemented by training the AI even further after it has already been launched, through reinforcement learning, but this process would still need data input from humans, which is really annoying.

A way to make it easier would be to give an AI a device on which it can run, plus sensors and output devices, so that it can learn from its sensors and use this information in its post-training training phase to gather more data and make the current events it perceives relevant.

As you can see, if we did that, then we would have an AI that could do anything, and learn from anything, which makes it both really really fucking dangerous and really really fucking interesting.

lol that’s funny my machine learning course used Alice in wonderland as an example for us to train our projects on

That wasn’t a coincidence. I watched a video some time ago where they used TensorFlow to do exactly that.

(I’m doing my CS bachelors btw)

Oh OK nice! Good luck!!


Online communism unless you actually want something for free.

Intellectual property is fake lmao. Train your AI on whatever you want

I agree, but only if it goes both ways. We should be allowed to use big corpo’s IPs however we want.

You are allowed to use copyrighted content for training. I recommend reading this article by Kit Walsh, a senior staff attorney at the EFF if you haven’t already. The EFF is a digital rights group who most recently won a historic case: border guards now need a warrant to search your phone.

I know. AI is capable of recreating many ideas it sees in the training data even if it doesn’t recreate the exact images. For example, if you ask for Mario, you get Mario. Even if you can’t use these images of Mario without committing copyright infringement, AI companies are allowed to sell you access to the AI and those images, thereby monetizing them. What I am saying is that if AI companies can do that, we should be allowed to use our own depictions of Mario that aren’t AI generated however we want.

AI companies can sell you Mario pics, but you can’t make a Mario fan game without hearing from Nintendo’s lawyers. I think you should be allowed to.

The comparison doesn’t work. Because the AI is replacing the pencil or other drawing tool. And we aren’t saying pencil companies are selling you Mario pics because you can draw a Mario picture with a pencil either. Just because the process of how the drawing is made differs, doesn’t change the concept behind it.

An AI tool that advertises Mario pictures would break copyright/trademark laws and hear from Nintendo quickly.

Except that you interact with the “tool” in pretty much the same way you’d interact with a human that you’re commissioning for art, minus a few pleasantries. A pencil doesn’t know how to draw Mario.

AI tools implicitly advertise Mario pictures because you know that:

- The AI was trained on lots of images, including Mario.

- The AI can give you pictures of stuff it was trained on.

An animation studio commissioned to make a cartoon about Mario would still get in trouble, even if they had never explicitly advertised the ability to draw Mario.

I don’t think how you interact with a tool matters. Typing what you want, drawing it yourself, or clicking through options is all the same. There are even other programs that allow you to draw by typing. They are way more difficult but again, I don’t think the difficulty matters.

There are other tools that allow you to recreate copyrighted material fairly easily. Character creators being on the top of the list. Games like Sims are well known for having tons of Sims that are characters from copyrighted IP. Everyone can recreate Barbie or any Disney Princess in the Sims. Heck, you can even download pre made characters on the official mod site. Yet we aren’t calling out the Sims for selling these characters. Because it doesn’t make sense.

Just so we’re clear, my position is that it should all be okay. Copyright infringement by copying ideas is a bullshit capitalist social construct.

I don’t buy the pencil comparison. If I have a painting in my basement that has a distinctive style, but has never been digitized and trained upon, I’d wager you wouldn’t be able to recreate either that image or its style. What gives? Because AI is not a pencil but more like a data mixer you throw complete works into and it spews out collages. Maybe collages of very finely shredded pieces, to the point you couldn’t even tell, but pieces of original works nonetheless. If you put any non-free works in it, they definitely contaminate the output, and so the act of putting them in in the first place should be a copyright violation in itself. The same as if I were to show you the image in question and you decided to recreate it, I can sue you and I will win.

That is a fundamental misunderstanding of how AI works. It does not shred the art and recreate things with the pieces. It doesn’t even store the art in the algorithm. One of the biggest methods right now is basically taking an image of purely random pixels. You show it a piece of art with a whole lot of tags attached. It then semi-randomly changes pixel colors until it matches the training image. That set of instructions is associated with the tags, and the two are combined into a series of tiny weights that the randomizer uses. Then the next image modifies the weights. Then the next, then the next. It’s all just teeny tiny modifications to random number generation. Even if you trained an AI on only a single image, it would be almost impossible for it to produce it again perfectly, because each generation starts with a truly random (as truly as a computer can get, and unweighted) image of pixels. Even if you force fed it the same starting image of noise that it trained on, it is still only weighting random numbers and still probably won’t create the original art, though it may be more or less indistinguishable at a glance.

AI is just another tool. Like many digital art tools before it, it has been maligned from the start. But the truth is what it produces is the issue, not how. Stealing others’ art by manually reproducing it or using AI is just as bad. Using art you’re familiar with to inspire your own creation, or using an AI trained on known art to make your own creation, should be fine.

As a side note, because it wasn’t too clear from your writing: the weights are only tweaked a tiny tiny bit by each training image. Unless the trainer sees the same image a shitload of times (Mona Lisa, that one stock photo used to show off phone cases, etc.), the image can’t be recreated by the AI at all. Elements of the image that are shared with lots of other images (shading style, poses, Mario’s general character design, etc.) could be, but you’re never getting that one original image or even any particular identifiable element from it out of the AI. The AI learns concepts and how they interact because the amount of influence it takes from each individual image and its caption is so incredibly tiny, but it trains on hundreds of millions of images and captions. The goal of AI image generation is to be able to create a vast variety of images directed by prompts. Generating lots of images which directly resemble anything in the training set is undesirable, and in the field it’s called over-fitting.
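A rough way to see the "tiny tweak per example" point is a toy sketch in Python. This is a two-weight linear model trained with plain gradient descent, not an image generator, and the names `sgd_step` and `predict`, the learning rate, and the numbers are all made up for illustration. One pass over an example barely moves the weights, while hammering the same example thousands of times makes the model fit it almost exactly:

```python
def predict(w, x):
    """Linear model: weighted sum of the inputs."""
    return sum(wi * xi for wi, xi in zip(w, x))


def sgd_step(w, x, y, lr):
    """One small gradient step on the squared error for one example."""
    err = predict(w, x) - y
    return [wi - lr * err * xi for wi, xi in zip(w, x)]


x, y = [1.0, 2.0], 3.0   # a single made-up training example
start = [0.0, 0.0]       # untrained weights

# Seen once: the weights move only a tiny bit toward the example.
seen_once = sgd_step(start, x, y, lr=0.001)

# Seen thousands of times: the model ends up memorizing this example.
seen_often = start
for _ in range(5000):
    seen_often = sgd_step(seen_often, x, y, lr=0.001)

print(abs(predict(seen_once, x) - y))   # still far from the target
print(abs(predict(seen_often, x) - y))  # essentially zero
```

In a real model the same small step size is shared across a huge number of different examples, so heavy repetition of one item is what pushes it toward memorization.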

Anyways, the end result is that AI isn’t photo-bashing, it’s more like concept-bashing. And lots of methods exist now to better control the outputs, from ControlNet, to fine-tuning on a smaller set of images, to DALL-E 3, which can follow complex natural language prompts better than older methods.

Regardless, lots of people find training generative AI on a mass of otherwise copyrighted data (images, fan fiction, news articles, ebooks, what have you) without prior consent just really icky.

I agree.

The problem is that you might technically be allowed to, but that doesn’t mean you have the funds to fight every court case from someone insisting that you can’t or shouldn’t be allowed to. There are some very deep pockets on both sides of this.

Let them come.

I like your moxie, soldier

What a tough guy you are, based AI artist fighting off all the artcucks like a boss 😎

That’s not it going both ways. You shouldn’t be allowed to use anyone’s IP against the copyright holder’s wishes. Regardless of size.

Nah all information should be freely available to as many people as practically possible. Information is the most important part of being human. All copyright is inherently immoral.

I’d agree with you if people didn’t need to earn money to live. You can’t enact communism by destroying the supporters of it. I fully support communism in theory, we should strive for a community based government. I’m also a game developer and when I make things I need to be able to pay my bills because we still live in capitalism.

If copyright was vigorously enforced a lot more people would starve than would be fed

It’s already vigorously enforced. Maybe you can expand on what you mean.

Go on etsy search mickey mouse and go report all the hundreds of artists whose whole career is violating the copyright of the most litigious company on the planet.

As long as I’m not pretending to be Nintendo, can you quantify how exactly releasing, for example, a fan Mario game is unethical? It having the potential to hurt their sales if you make a better game than them doesn’t count because otherwise that would imply that out-competing anyone in any market must be unethical, which is absurd.

No, it’s about if you make a game that’s worse or off-brand. If you make a bunch of Mario games into horror games and then everyone thinks of horror when they think of Mario then good or bad, you’ve ruined their branding and image. Equally, if you make a bunch of trash and people see Mario as just a trash franchise (like how most people see Sonic games) then it ruins Nintendo’s ability to capitalize on their own work.

No one is worried about a fan-made Mario game being better.

Wouldn’t that only be a problem if you pretended to be Nintendo? There are a lot of fan Sonic games and I don’t think it’s affected Sonic’s image very much. There’s shit like Sonic.EXE, which is absolutely a horror game, but people still don’t think of horror when they think of Sonic. The reason Sonic is a trash franchise is because of SEGA consistently releasing shitty Sonic games. Hell, some of the fan games actually do beat out the official games in quality and polish.

Hypothetically, assuming you’re right and fan games actually do hurt their branding, there’s still no reason for it to be illegal. We don’t ban shitty mobile games for giving good mobile games a bad rep, and neither Nintendo nor mobile devs can claim libel or defamation.

Actually we do. If your game is so terrible, it can get removed from the Google Play store. It has to be really bad and they rarely do it. Steam does this as well. Epic avoids this by vetting the games they put on their platform site first. It’s why the term asset flip exists.

Ban in a legal sense, not ban from a proprietary store.

“Artists don’t deserve to profit off their own work” is stupid as shit. Complain about copyright abuse and lobbying a la Disney and I’ll be right there with you, but people shouldn’t have the right to take your work and profit off it without either your consent or paying you for it.

Artists and other creatives who actually do work to create art (not shitting out text into an image generator) should take every priority over AI “creators.”

No you don’t understand, the machine works exactly like a human brain! That makes stealing the work of others completely justifiable and not even really theft!

/s, bc apparently this community has a bunch of dumbass tech bros that genuinely think this

This but mostly unironically. And before you go insulting me, I’m an artist myself and wouldn’t be where I am if I wasn’t allowed to learn from other people’s art to teach myself.

don’t dismiss me, I like being spit on

Having a masters degree in machine learning doesn’t make you an artist grow tf up xD

No, but drawing for the last 13 years of my life does.

And this is a strawman. If this argument is being made, it’s most likely because of their own misunderstanding of the subject. They are most likely trying to make the argument that the way biological neural networks and artificial neural networks ‘learn’ is similar. Which is true to a certain extent since one is derived from the other. There’s a legitimate argument to be made that this inherently provides transformation, and it’s exceptionally easy to see that in most unguided prompts.

I haven’t seen your version of this argument being spoken around here at all. In fact it feels like a personal interpretation of someone who did not understand what someone else was trying to communicate to them. A shame to imply that’s an argument people are regularly making.

Equating training AI to not being able to profit is stupid as shit and the same bullshit argument big companies use to say “we lost a bazillion dollars to people pirating our software”. Someone training their AI on an artwork (that is probably under a creative commons licence anyway) doesn’t suck money out of an artist’s pocket that they would have otherwise made.

Artists and other creatives who actually do work to create art (not shitting out text into an image generator) should take every priority over AI “creators.”

Why are you the one that gets to decide what is “work” to create art? Should digital artists not count because they are computer assisted, don’t require as much skill and technique as “traditional” artists and use tools that are based on the work of others like, say, brush makers?

And the language you use shows that you’re vindictive and angry.

Should digital artists not count because they are computer assisted, don’t require as much skill and technique as “traditional” artists and use tools that are based on the work of others like, say, brush makers?

My brother in Christ, they didn’t even allude to this, this is an entirely new thought.

Yeah no shit, Sherlock. I’m applying their flawed logic to other situations, where the conclusion is even more dumb, so he can see that the logic doesn’t work.

But leaping from ‘copyright is made-up’ to ‘so artists should starve?’ is totally reasonable discourse.

Explain what you think will happen if artists who depend on their art to make a living lose copyright protection.

Commissions, patronage, subscriptions, everything else rando digital artists do when any idiot can post a JPG everywhere and DMCA takedowns accomplish roughly dick.

Meanwhile - you wanna talk about people who’ve been fucked over by corporations that decide their original artwork is too close to something a dead guy made?

Or look into whether professional artists were having a good time, before all this? Intellectual property laws have funded and then effectively destroyed countless years of effort by artists who aren’t even allowed to talk about it due to NDAs. CGI firms keep losing everything and going under while the movies they worked on make billions. The status quo is not all sunshine and rainbows. Pretending the choice is money versus nothing is deeply dishonest.

“Just internet beg” oh okay. Shows exactly how much you value the people making the art you want to feed into the instant gratification machine.

They said IP, IP protects artists from having their work stolen. The fact AI guzzlers are big mad that IP might apply to them too is irrelevant.

Digital artists do exactly as much work as traditional artists, comparing it to AI “art” from an AI “artist” is asinine. Do you actually think digital artists just type shit in and a 3D model appears or something?

And yeah I’m angry when my friends and family who make their living as actual artists, digital and traditional, have their work stolen or used without their permission. They aren’t fucking corporations making up numbers about lost sales, they’re spending weeks trying to get straight up stolen art mass printed on tshirts and mugs removed from online sale. They’re going outside and seeing their art on shit they’ve never sold. Almost none of them own a home or even make enough to not have a regular job, it’s literally taking money out of their pockets to steal their work. This is the shit you’re endorsing by shitting on the idea of IP.

Do you actually think artists using AI tools just type shit into the input and output decent art? It’s still just a new, stronger digital tool. Many previous tools have been demonized, claiming they trivialize the work and people who used them were called hacks and lazy. Over time they get normalized.

And as far as training data being considered stealing IP, I don’t buy it. I don’t think anyone who’s actually looked into what the training process is and understands it properly would either. For IP concerns, the output should be the only meaningful measure. It’s just as shitty to copy art manually as it is to copy it with AI. Just because an AI used an art piece in training doesn’t mean it infringed until someone tries to use it to copy it. Which, agreed, is a super shitty thing to do. But again, it’s a tool, how it’s used is more important than how it’s made.

Lmao, I’ve used AI image generation, you’re not going to be able to convince me any skill was involved in what I made. The fact some people type a lot more and keep throwing shit at the wall to see what sticks doesn’t make it art or anything they’ve done with their own skill. The fact none of them can control what they’re making every time the sauce updates is proof of that.

If it’s so obviously not IP violating to train with it then I’m sure it’ll be totally fine if they train them without using artists’ work without permission, since it totally wasn’t relying on those IP violating images. Yet for some reason they fight this tooth and nail. 🤔

Except they totally could. But a data source of such size of material where everyone opted in to use for AI explicitly does not exist. The reason they fight it is in part also because training such models isn’t exactly free. The hardware that it’s done on costs hundreds of thousands of dollars and must run for long periods. You would not just do that for the funsies unless you have to. And considering the data by all means seems to be collected in legal ways, they have cause to fight such cases.

It’s a bit weird to use that as an argument to begin with, since a party that knows they are at fault usually settles rather than fight on and incur more costs. It’s almost as if they don’t agree with your assertion that they needed permission, and that those images were IP violating 🤔

Oh no it costs money to use the art stealing machine to make uncopyrightable trash? 🥺

"But a data source of such size of material where everyone opted in to use for AI explicitly does not exist. "

Dang I wonder why 🤔🤔🤔🤔

First of all, your second point is very sad to hear, but also a non-factor. You are aware people stole artwork before the advent of AI right? This has always been a problem with capitalism. It’s very hard to get accountability unless you are some big shot with easy access to a lawyer at your disposal. It’s always been shafting artists and those who do a lot of hard work.

I agree that artists deserve better and should gain more protections, but the unfortunate truth is that the wrong kind of response to AI could shaft them even more. Let’s say inspiration could in some cases be ruled to be copyright infringement if the source of the inspiration could be reasonably traced back to another work. This could give big companies like Disney an easier pathway to sue people for copyright infringement; after all, your mind is forever tainted by their IP after you’ve watched a single Disney movie. Banning open source models from existing could also create a situation where the same big companies could create internal AI models from the art in their possession, but anyone without enough material could not. Which would mean that only the people already taking advantage of artists will benefit from the existence of the technology.

I get that you want to speak up for your friends and family, and perhaps they do different work than I imagine, but do you actually talk to them about what they do in their work? Because digital artists also use non-AI algorithms to generate meshes and images. (And yes, that could be boiled down to ‘type shit in and a 3D model appears’.) They also use building blocks, prefabs, and use reference assets to create new unique assets. And like all artists they do take (sometimes direct) inspiration from the ideas of others, as does the rest of humanity. Some of the digital artists I know have embraced the technology and combined it with the rest of their skills to create new works more efficiently and reduce their workload. Either by being able to produce more, or being able to spend more time refining works. It’s just a tool that has made their life easier.

All of that is completely irrelevant to the fact that image generators ARE NOT PEOPLE and the way that people are inspired by other works has absolutely fuck all to do with how these algorithms generate images. Ideas aren’t copyrightable but these algorithms don’t use ideas because they don’t think, they use images that they very often do not have a legal right to use. The idea that they are equivalent is a self serving lie from the people who want to drive up hype about this and sell you a subscription.

I watch my husband work every day as a professional artist and I can tell you he doesn’t use AI, nor do any of the artists I know; they universally hate it because they can tell exactly how and why the shit it makes is hideous. They spot generated images I can’t because they’re used to seeing how this stuff is made. The only thing remotely close to an algorithm that they use are tools like stroke smoothing, which itself is so far from image generation it would be an outright lie to equate them.

Companies aren’t using this technology to ease artist workloads, they’re using it to replace them. There’s a reason Hollywood fought the strike as hard as they did.

The fact they are not people does not mean they can’t use the same legal justifications that humans use. The law can’t think ahead. The justification is rather simple, the output is transformative. Humans are allowed to be inspired by other works because the ideas that make up such a concept can’t be copyrighted because they can be applied to be transformative. If the human uses that idea to produce something that’s not transformative, it’s also infringement. AI currently falls into that same reasoning.

You call it a self serving lie, but I could easily say that about your arguments as well: that you only have this opinion because you don’t like AI (it seems). That’s not constructive, and since I hope you care about artists as well, I implore you to actually engage in good-faith debate rather than just assuming the other person must be lying because they don’t share your opinion. You are also forgetting that the people that benefit from image generators are people. They are artists too. Most from before AI was a thing, and some because it became a thing.

Again, sorry to hear your husband feels that way. I feel he is doing himself a disservice by dismissing a new technology, as history has not been kind to those who oppose inevitable change, especially when there are no good non-emotional reasons against this new technology. Most companies have never cared about artists; that fact was true without AI, and that fact will remain true whatever happens. But if they replace their artists with AI they are fools, because the technology isn’t that great on its own (currently). You need human intervention to use AI to make high quality output; it’s a tool, not the entire process.

The Hollywood strikes are a good example of what artists should be doing rather than making certain false claims online. Join a union, get protection for your craft. Just because something is legal doesn’t mean you can’t fight for your right to demand specific protections from your employer. But those protections do not affect laws; they are organizational. They have no ramifications for people who are not part of the guilds involved. If a company that protects its artists while allowing them to use AI to accelerate their workflow comes out on top against a company that, despite its best intentions, made its art department less profitable, that will also cause those artists to lose their jobs. Since AI is very likely not going away completely, even in the most optimistic of scenarios, that is eventually a worse situation than before.

And lastly, I guess your husband does different work than the digital artists I work with then. You have a ton of generation tools for meshes and textures. I also never equated it directly to AI, but you stated that they use no tools which do all the work for them (such as building a mesh for them), which is false. You wouldn’t use the direct output of AI either. I implore you to look at “algorithmic art”: https://en.wikipedia.org/wiki/Algorithmic_art

“I can” and “you can’t” are not opposites.

I have no idea what you’re trying to convey here.

“Artists don’t deserve to profit off their own work” is not what anyone said.

Even if people can just take your shit and profit off it - so can you.

This is not a complete rebuttal, but if you need the core fallacy spelled out, let’s go slowly.

Why are you entitled to profit off the labor of someone else?

Why am I not entitled to profit from my own work building on stories I enjoy?

Isn’t this a completely different conversation than the one we were having, and kind of missing the point? Yes, imo you should be allowed to do that. Still, AI companies are using the labor of millions of artists for free to train their AIs, which are then threatening to eliminate ways for these artists to earn income.

How is that related in any way to the ways that copyright has been exploited against fanmade art?

Even if people can just take your shit and profit off it - so can you.

What entitles someone to take another person’s work and profit off it?

Fuck your sophistry.

No. Fuck that. I don’t consent to my art or face being used to train AI. This is not about intellectual property, I feel my privacy violated and my efforts shat on.

Unless you have been locked in a sensory deprivation tank for your whole life, and have independently developed the English language, you too have learned from other people’s content.

Well, my knowledge can’t be used as a tool of surveillance by the government and the corporations, and I have my own feelings, intent and everything in between. AI is artificial intelligence; AI is not an artificial person. AI doesn’t have thoughts, feelings or ideals. AI is a tool, an empty shell that is used to justify stealing data and surveillance.

This very comment is a resource that government and corporations can use for surveillance and training.

AI doesn’t have thoughts? We don’t even know what a thought is.

We may not know what comprises a thought, but I think we know it’s not matrix math. Which is basically all an LLM is.

Hard disagree, the neural connections in the brain can be modeled with matrix math as well. Sure some people will be uncomfortable with that notion, especially if they believe in spiritual stuff outside physical reality. But if you’re the type that thinks consciousness is an emergent phenomenon from our underlying biology then matrix math is a reasonable approach to representing states of our mind and what we call thoughts.
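For anyone wondering what "matrix math" means here, this is a minimal sketch in Python of one layer of artificial neurons: each output is a weighted sum of the inputs plus a bias, passed through a simple nonlinearity. The weights, inputs, and the names `relu` and `layer` are invented for illustration and are not taken from any real model:

```python
def relu(v):
    """Simple nonlinearity: negative values are clipped to zero."""
    return [max(0.0, a) for a in v]


def layer(weights, bias, inputs):
    """One layer of 'neurons' as a matrix-vector product plus a bias."""
    sums = [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, bias)
    ]
    return relu(sums)


# Made-up connection strengths: 2 input signals feeding 2 neurons.
weights = [[1.0, -1.0],
           [0.5, 0.5]]
bias = [0.0, -1.0]
signals = [2.0, 1.0]

activations = layer(weights, bias, signals)
print(activations)  # [1.0, 0.5]
```

Whether stacking enough of these amounts to a thought is exactly what the two comments above disagree about; the sketch only shows what the arithmetic looks like.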

Shut the fuck up tech bro xD

Yet we live in a world where people will profit from the work and creativity of others without paying any of it back to the creator. Creating something is work, and we don’t live in a post-scarcity communist utopia. The issue is the “little guy” always getting fucked over in a system that’s pay-to-play.

Donating effort to the greater good of society is commendable, but people also deserve to be compensated for their work. Devaluing the labor of small creators is scummy.

I’m working on a tabletop setting inspired by the media I consumed. If I choose to sell it, I’ll be damned if I’m going to pay royalties to the publishers of every piece of media that inspired me.

If you were a robot that never needed to eat or sleep and could generate 10,000 tabletop RPGs an hour with little to no creative input then I might be worried about whether or not those media creators were compensated.

The efficiency something can be created with should have no bearing on whether someone gets paid royalties.

It absolutely should, especially when the “creator” is not a person. AI is not “inspired” by training data, and any comparisons to human artists being inspired by things they are exposed to are made out of ignorance of both the artistic process and how AI generates images and text.

When, not if, such a robot exists, do you imagine we’ll pay everyone who’d ever published a roleplaying game? Like a basic income exclusively for people who did art before The Training?

Or should the people who want things that couldn’t exist without magic content engines be denied, to protect some prior business model? Bear in mind this is the literal Luddite position. Weavers smashed looms. Centuries later, how much fabric in your life do you take for granted, thanks to that machinery?

‘We have to stop this labor-saving technology because of capitalism’ is a boneheaded way to deal with any conflict between labor-saving technology… and capitalism.

I know this is shocking to people who have never picked up a brush or written anything other than internet arguments after the state stopped mandating they do so when they graduated high school, but you can just create shit without AI. Literal children can do it.

And there is no such thing as something that “couldn’t exist” without the content stealing algorithm. There is nothing it can create that humans can’t, but humans can create things it can’t.

There’s also something hilarious about the idea that the real drag on the artistic process was the creating art part. God almighty, I’d rather be a Luddite than a Philistine.

Those 10000 tabletop RPGs will almost certainly be completely worthless on their own, but might contain some novel ideas. Until a human comes by and scours it for ideas and combines it. It could very well be that in the same time it could only create 1 coherent tabletop RPG idea.

Should be mentioned though, AIs don’t run for free either, they cost quite a lot of electricity and require expensive hardware.

what a shitty argument. AI isn’t people

Then don’t post your art or face publicly. I agree with you if it’s obtained through malicious ways, but if you post it publicly then expect it to be used publicly.

If you post your art publicly why should it be legal for Amazon to take it and sell it? You are deluding yourself if you believe AI having a get out of jail free card on IP infringement won’t be just one more source of exploitation for corporations.

Taking it and selling it is obviously not legal, but taking it and using it for training data is a whole different thing.

Once a model has been trained the original data isn’t used for shit, the output the model generates isn’t your artwork anymore it isn’t really anybody’s.

Sure, with some careful prompts you can get the model to output something in your style fairly closely, but the output image isn’t yours. It’s whatever the model conjured up based on the prompt in your style. The resulting image is still original to the model.

It’s akin to someone downloading your art, tracing it over and over again till they learn the style and then going off to draw non-traced original art just in your style

“You don’t understand, it’s not infringement because we put it in a blender first” is why AI “art” keeps taking Ls in court.

A court saying “nobody owns this” is closer to the blender argument than the ripoff argument.

Care to point to those Ls in court? Because as far as I’m aware they are mostly still ongoing and no legal consensus has been established.

It’s also a bit disingenuous to boil his argument down to what you did, as that’s obviously not what he said. You would most likely agree that a human would produce transformative works even when basing most of their knowledge on that of another (such as tracing).

Ideas are not copyrightable, and neither are styles. You could use that understanding of another’s work to create forgeries of their work, but hence why there exist protections against forgeries. But just making something in the style of another does not constitute infringement.

EDIT:

This was pretty much the only update on a currently running lawsuit I could find: https://www.reuters.com/legal/litigation/us-judge-finds-flaws-artists-lawsuit-against-ai-companies-2023-07-19/

“U.S. District Judge William Orrick said during a hearing in San Francisco on Wednesday that he was inclined to dismiss most of a lawsuit brought by a group of artists against generative artificial intelligence companies, though he would allow them to file a new complaint.”

"The judge also said the artists were unlikely to succeed on their claim that images generated by the systems based on text prompts using their names violated their copyrights.

“I don’t think the claim regarding output images is plausible at the moment, because there’s no substantial similarity” between images created by the artists and the AI systems, Orrick said."

Hard to call that an L, so I’m eagerly awaiting them.

Biggest L: that shit ain’t copyrightable. A doodle I fart out on a sticky pad has more legal protection.

Go fuck yourself AI bitch :3

If the large corporations can use IP to crush artists, artists might as well try to milk every cent they can from their labor. I dislike IP laws as well, and you can never use the masters’ tools to dismantle their house, but you can sure as shit do damage and get money for yourself.

Luckily, AI aren’t the master’s tools, they’re a public technology. That’s why they’re already trying their hand at regulatory capture. Just like they’re trying to destroy encryption. Support open source development, it’s our only chance. Their AI will never work for us. John Carmack put it best.

AI algorithms aren’t the masters tools in the sense that anyone can set up a model using free code on cheap tech, but they do require money to improve and produce quality products. The training data requires labor of artists to produce, the computers the model runs on require labor to make, and the electricity that allows the model to continuously improve requires, you guessed it, labor. Labor will always, and should always, be expensive, making AI most useful to those with money to spend.

IP is only one tool in the masters’ tool box, but capitalism is their box, and the other forms of capital can be used to swing the public’s tools far harder than a wage laborer.

Hence why it’s important that open source models should keep the support of the public. If they have to close shop, what remains will be those made by tech giants. They will be censored, they will be neutered, except if you pay enough for them. The power should remain with the artists, and not those with the money.

Based take.

Me, literally training a neural net to generate pictures of carrot cakes right now:

WHERE DID YOU GET THE DATA?

Best not be including my carrot erotica in your training data.

I feel the current AI crawling bots + “opt-out your data” tactic is ingeniously evil.

It’s hilarious really

Companies have been stealing data for so long, and then when another company comes and steals their data by scraping it, they go surprised Pikachu.

The best part is the end result, not where the data comes from. Tired of hearing about AI models “stealing” data. I put all my art, designs and code online and assume it’ll be used to train models (which I’ll be able to use later on)

You’re fine with a corporation making money off your copyrighted work? Without seeing a cent of it?

Why not? Same as a person being inspired to reuse certain aspects. Artists reuse other artists work constantly and usually more blatantly than what AI does.

I wouldn’t want to copyright every visual pattern conceivable, everything would be a copyright violation of some sort.

Tell me you know nothing about how machine learning works without saying it

I have a master’s degree in AI and have worked with it in commercial applications for the last 7 years, and there’s nothing wrong with what he said.

People who parrot this dumbass comment usually know nothing about the subject matter

It is how it works…

What? That’s literally how it works, a neural network makes connections based on patterns it sees across all of the samples it has. This is how it works for biological neurons and it’s how it works for artificial neurons. Are you stealing everyone else’s rightful property when you draw a character in an anime artstyle? Because that entirely came from neural connections formed from you viewing other peoples’ art. What you created is not “original”, it is formed entirely based off of patterns you obtained from other things and mixed together. With the added bonus of you having an actual stored memory of other peoples’ art that your brain used to form those connections, so you tend to make it even more similar to already existing art than you realize.

I actually do work with AI but whatever, if you have something specific you’d like to argue go for it. Otherwise let’s not get into a pissing contest over credentials

Care to elaborate? Nothing is technically wrong with what he said

Unfathomably based

Disney doesn’t agree

Now I’m pretty sure they won’t see a conflict of interest in not using their IP to train AI but advocating for your data for training.

The inverse is also true. Disney will make their own AI regardless of being able to use anyone else’s data for training, because they have a ton of data already. The only ones who will be shafted if that freedom is restricted are those without a library of data to train on, who will have no access to AI.

So long as it’s correctly attributed it’s no more immoral than what a corpo inherently does.

I interpreted this less as being about art models and more about predictive models trained off of data with racial prejudice (i.e. crime prediction models trained off of old police data)

You’re putting your stuff out there so some cryptobro cracker can steal it, claim credit for it, and then call you a peasant for helping him make it.

It’s sort of an accelerationistic take on broken IP law I’m sure of it.

One thing I’ve started to think about for some reason is the problem of using AI to detect child porn. In order to create such a model, you need actual child porn to train it on, which raises a lot of ethical questions.

Cloudflare says they trained a model on non-cp first and worked with the government to train on data that no human eyes see.

It’s concerning there’s just a cache of cp existing on a government server, but it is for identifying and tracking down victims and assailants, so the area could not be more grey. It is the greyest grey that exists. It is more grey than #808080.

well, many governments had no issue taking over a cp website and hosting it for months to come, using it as a honeypot. Still they hosted and distributed cp, possibly to thousands of unknown customers who can redistribute it.

I’m pretty sure those AI models are trained on hashes of the material, not the material directly, so all you need to do is save a hash of the offending material in the database any time that type of material is seized

That wouldn’t be ai though? That would just be looking up hashes.

You’re almost there…

Nah, flipping the image would completely bypass a simple hash map
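Quick sketch of why that is, assuming the "simple hash map" is an exact lookup keyed on a cryptographic hash of the raw pixel bytes. The 3x3 "image" is a made-up toy, and the `exact_hash` and `flip_horizontal` helpers are just for this illustration.

```python
import hashlib

def exact_hash(pixels):
    """SHA-256 over the raw bytes of a grayscale image given as a list of rows."""
    raw = bytes(value for row in pixels for value in row)
    return hashlib.sha256(raw).hexdigest()

def flip_horizontal(pixels):
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in pixels]

# Toy 3x3 grayscale image (hypothetical data)
image = [
    [10, 20, 30],
    [40, 50, 60],
    [70, 80, 90],
]

# The lookup table only knows the hash of the original bytes
known_hashes = {exact_hash(image)}
flipped = flip_horizontal(image)

print(exact_hash(image) in known_hashes)    # the untouched copy is found
print(exact_hash(flipped) in known_hashes)  # the mirrored copy is not
```

Any change to the bytes (a flip, a crop, a re-encode) produces an unrelated digest, so an exact lookup only catches byte-identical copies.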

From my very limited understanding it’s some special hash function that’s still irreversible but correlates more closely with the material in question, so an AI trained on those hashes would be able to detect similar images because they’d have similar hashes, I think

Perceptual hashes, I think they’re called

could you provide a source for this? that sounds very counterintuitive and bad for the hash functions. especially as the whole point of AI training in this case is detecting new images. And say a small boy at the beach wearing speedos has a lot of similarity to a naked boy. So looking by some resemblance in the hash function would require the hashes to practically be reversible.

Not all hashes are for security. They’re called perceptual hashes

Probably a case of definitional drift of the word, because it probably should be just for the security kind.

I’m no expert, but we use those kinds of hashes at my company to detect fraudulent software. Here’s a Wikipedia link: https://en.m.wikipedia.org/wiki/Locality-sensitive_hashing
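To make the idea less abstract, here is a toy version of one well-known perceptual hash, the "average hash": one bit per pixel, set when that pixel is brighter than the image mean. This is only a sketch on made-up 4x4 data. Real implementations shrink the image to a small fixed size first, and I'm not claiming this is what any particular detection system uses.

```python
def average_hash(pixels):
    """Toy perceptual hash: bit is 1 where a pixel is brighter than the image mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]

def hamming_distance(hash_a, hash_b):
    """Number of bit positions where the two hashes differ."""
    return sum(a != b for a, b in zip(hash_a, hash_b))

# Hypothetical 4x4 grayscale image: dark left half, bright right half
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [12, 22, 202, 212],
    [18, 28, 208, 218],
]
# Same picture, slightly brighter: different bytes, same visual structure
brightened = [[min(255, value + 10) for value in row] for row in original]
# A structurally different picture: light and dark swapped
inverted = [[255 - value for value in row] for row in original]

base = average_hash(original)
print(hamming_distance(base, average_hash(brightened)))  # small distance: looks alike
print(hamming_distance(base, average_hash(inverted)))    # large distance: looks different
```

Note the hash only keeps a coarse light/dark layout, so you can't rebuild the picture from it, and "similar" just means few differing bits. A mirrored image would still defeat this naive version unless both orientations are hashed.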

You absolutely do not need real CSAM in the dataset for an AI to detect it.

It’s pretty genius actually: just like you can make the AI create an image with prompts, you can get prompts from an existing image.

An AI detecting CSAM would have to be trained on nudity and on children separately. If an image-to-prompts conversion results in “children” AND “nudity”, it is very likely the image was of a naked child.

This has a high false positive rate, because non-sexual nude images of children, which quite a few parents have (like images of their child bathing) would be flagged by this AI. However, the false negative rate is incredibly low.

It therefore suffices for an upload filter for social media but not for reporting to law enforcement.
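The filter-versus-reporting distinction comes down to base rates, and a bit of arithmetic shows it. This is a minimal sketch using Bayes' rule. All three numbers are invented purely for illustration and are not measurements of any real system.

```python
def flag_precision(prevalence, true_positive_rate, false_positive_rate):
    """Share of flagged items that are actual positives (Bayes' rule)."""
    true_flags = prevalence * true_positive_rate
    false_flags = (1.0 - prevalence) * false_positive_rate
    return true_flags / (true_flags + false_flags)

# Hypothetical numbers: 1 in 10,000 uploads is a real positive,
# the classifier catches 99% of them and wrongly flags 1% of everything else.
precision = flag_precision(
    prevalence=0.0001,
    true_positive_rate=0.99,
    false_positive_rate=0.01,
)
print(f"{precision:.2%} of flagged uploads would be real positives")
```

With numbers like these only about 1 flag in 100 is a true hit. That is tolerable for silently blocking an upload, but far too noisy to forward each flag to the police.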

This dude isn’t even whining about the false positives, they’re complaining that it would require a repository of CP to train the model. Which yes, some are certainly being trained with the real deal. But with law enforcement and tech companies already having massive amounts of CP for legal reasons, why the fuck is there even an issue with having an AI do something with it? We already have to train mods on what CP looks like, there is no reason it’s more moral to put a human through this than a machine.

This is a stupid comment trying to hide as philosophical. If your website is based in the US (like 80 percent of the internet is), you are REQUIRED to keep any CSAM uploaded to your website and report it. Otherwise, you’re deleting evidence. So all these websites ALREADY HAVE giant databases of child porn. We learned this when Lemmy was getting overrun with CP and DB0 made a tool to find it. This is essentially just using shit any legally operating website would already have around the office, and having a computer handle it instead of a human who could be traumatized or turned on by the material. Are websites better for just keeping a database of CP and doing nothing but reporting it to cops who do nothing? This isn’t even getting into how moderators that look for CP STILL HAVE TO BE TRAINED TO DO IT!

Yeah, a real fuckin moral quandary there, I bet this is the question that killed Kant.

Never forget: businesses do not own data about you. The data belongs to the data subject, businesses merely claim a licence to use it.

Legally, businesses very much own the data about you unfortunately.

No, they very explicitly don’t. They claim a licence in perpetuity to nearly all the same rights as the data owner, but the data subject is still the owner.

Also, that licence may not be so robust. A judge should see that the business has no obligation to continue hosting the website, and that it offers nothing in return for the data, so the perpetual licence is not a reasonable term in the contract and should be struck down to something the data subject can rescind. In some respects we do have this with “the right to be forgotten” and the right to have businesses delete your data, however the enforcement of this is sorely lacking.

Laws change over time, though. Everyone is the victim of this practice, so eventually the law should catch up.

What do you think owning is? If I pull your strings, you’re my puppet. Ownership is control.

not necessarily. Your landlord is the owner of your flat, but he cedes all rights to control over it to you, within the limits of the contract, and can only take back control if he has evidence of you violating the contract.

Ironically your metaphor works really well as an example of how that’s not true - just because you’re allowed to pull the strings, doesn’t mean you’re the owner of the puppet

You know, that’s kinda fair. That applies pretty well for media licensing in particular.

For Stable Diffusion, it comes from images on Common Crawl through the LAION 5b dataset.

Jesus Christ who called in the tech bro cavalry? Get a fucking life losers you’re not artists and nobody is proud of you for doing the artistic equivalent of commissioning an artist (which you should be doing instead of stealing their art and mashing it into a shitty approximation of art)

It’s like photography. Photography + photoshop for some workflows. There’s a low barrier to entry.

Would you say the same thing to someone proud of how their tracing came out?

These are not comparable to AI image generation.

Even tracing has more artistic input than typing “artist name cool thing I like lighting trending on artstation” into a text box.

So about the same as a photograph then

Aside from the fact that your comment applies to photography as well, I think it’s fair to point out image generation can also be a complex pipeline instead of a simple prompt.

I use ComfyUI on my own hardware and frequently include steps for control net, depth maps, canny edge detection, segmentation, loras, and more. The text prompts, both positive and negative, are the least important parts in my workflow personally.

Hell sometimes I use my own photos as one of the dozens of inputs for the workflow, so in a sense photography was included.

Annoying and aggressive about it to the point where you’d like to wring their necks? Yeah, that’s exactly what that’s like.

it’s funny, but still, where did you get the data? XD

From Reddit comments.

Content platforms could start to poison their data with random AI generated garbage

All damn time as a project committee.