Using only the power of a small star, we were able to crash Windows in record time.

Vegeta, what’s his MHz level?

Is one of the records heat-related? 🫠

Nuclear fusion won’t happen at room temperatures. You gotta give some to take some.

Damn, I just saw the headline on another app and came over here to make a fusion joke.

All that, and Electron apps are still sluggish.

Hardware can’t fix what’s broken in software.

Only -231 degrees required, nice.

Is clock speed still a thing, or is it all about cores now?

As far as I know, clock speed is still pretty nice to have, but chip development has shifted towards adding multiple cores because it basically became technologically impossible to continue increasing clock speeds.

A nice fun fact: if you consider how fast electricity travels in silicon, it turns out that for a clock that pulses billions of times per second (which is what gigahertz means), it is physically impossible for each pulse to get all the way across a 2 cm die before the next pulse starts. This is exacerbated by the fact that a processor has many meandering paths throughout rather than one straight line.

So at any given moment, there are several clock cycles traveling through a modern processor at the same time, and the designers have to just “come up” with a solution that makes that work. Never mind that almost all digital logic design tools are not built to account for this, so they end up having to use analog design tools instead (analog as in audio chips, not as in pen-and-paper design).

Signals don’t have to make it across the whole die each clock pulse. They just have to make it to the next register in their pipeline/data path, and timing tools for that absolutely exist. They treat it as analog because the signals themselves are analog and chips must account for things like the time it takes for a signal to go from a 0 to a 1 (or vice versa), as well as the time it takes to “charge” a flip-flop so that it registers the signal change and holds it stable for the next stage of the pipeline.
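
To put rough numbers on the distance argument above, here is a minimal sketch. The function name and the `velocity_fraction` parameter are made up for illustration, and the 0.5 default is only an assumption: real on-chip wires are RC-limited and usually far slower than any fixed fraction of the speed of light, so treat the result as a generous upper bound.

```python
# Back-of-the-envelope: how far can a signal travel in one clock period?
C_VACUUM_M_PER_S = 299_792_458  # speed of light in vacuum


def distance_per_cycle_cm(freq_ghz: float, velocity_fraction: float = 0.5) -> float:
    """Distance (cm) covered in one clock period.

    velocity_fraction is an ASSUMED signal speed as a fraction of c.
    Real on-chip interconnect is typically much slower than this.
    """
    period_s = 1.0 / (freq_ghz * 1e9)  # one clock period in seconds
    return C_VACUUM_M_PER_S * velocity_fraction * period_s * 100.0


if __name__ == "__main__":
    for freq in (1.0, 3.0, 6.2):
        at_c = distance_per_cycle_cm(freq, velocity_fraction=1.0)
        at_half_c = distance_per_cycle_cm(freq)
        print(f"{freq:>4} GHz: {at_c:6.2f} cm at c, {at_half_c:6.2f} cm at 0.5c")
```

At 6.2 GHz this gives roughly 4.8 cm per cycle at the vacuum speed of light and about 2.4 cm at the assumed half speed. That is already in the same range as a 2 cm die before any RC delay or routing detours are counted, which is why signals only need to reach the next register each cycle.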

Depends on what you are doing.

For gaming, you want speed.

For rendering, you want cores.

That’s a typical rule of thumb, since games will always be limited by the number of threads they use, while rendering, compiling, etc. typically use everything they can get.

And CPUs with higher core counts tend to have lower clock speeds per core, leading to games sometimes running much better on mid-range hardware than on the latest and greatest.

Yeah, I forgot to mention that. Thanks for picking up my slack.

More cores > more heat > less speed per core to manage the heat.

Fewer cores > less overall heat > more speed per core.

Which is why, generally, a 5600 is better for gaming than a 5950.

The 3D cache chips throw a minor wrench into things though, as the extra and faster cache can help compensate for lower speeds, which makes the 5800X3D generally a better gaming chip than the 5600, despite lower speeds.
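
The speed-versus-cores rule of thumb above can be illustrated with Amdahl’s law, which is a standard textbook model and not something the thread itself derives. The parallel fractions below (0.5 for a game, 0.95 for a render job) are made-up illustrative values, not measurements of any real workload.

```python
# Amdahl's law: speedup from n cores when only a fraction p of the work
# can run in parallel. The serial part (1 - p) does not get faster.


def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over a single core for the given parallel fraction."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # ASSUMED parallel fractions, chosen only to show the shape of the curve.
    workloads = {"game (p=0.5)": 0.5, "render (p=0.95)": 0.95}
    for name, p in workloads.items():
        for cores in (6, 16):
            print(f"{name:16} {cores:2d} cores: {amdahl_speedup(p, cores):.2f}x")
```

With a low parallel fraction, going from 6 to 16 cores barely moves the speedup, so per-core clock speed (and cache) matters more. With a high parallel fraction, the extra cores pay off.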

Games are optimized for multiple cores to a much higher degree than they used to be. Single core games are uncommon, even on the indie scene.

They were held back for a long time by console hardware, but that’s not a problem anymore.

It would be interesting to see what happens in a superconducting (-271°C?) CPU. At least leakage due to tunneling effects should be reduced at -231°C.

Thank fuck it wasn’t Kelvin!

Was one of the records “Largest Dwarf Fortress Fort with a playable frame rate”?

There are multithreading mods for almost every moddable game, be it Satisfactory, Rimworld or Oxygen Not Included. Not for Dwarf Fortress?

You can mod almost everything in Dwarf Fortress, down to the shear strength of a single beard hair - but you can’t mod the threading :)

Nowadays Dwarf Fortress has built-in multithreading (and the combination of other optimizations and the progress in CPU power has made it a fairly well-performing game overall).

You can finally open a “Hello world” in Rust.

Alas, we’re still a couple of decades away from being fast enough to compile it in the first place.

Open it, not run it.

This may excite some, but I value sustained real-world performance more. For example, the fast processors tested by Gamers Nexus.

“You might enjoy F1 racing, but I value fuel-efficient commuters more.”

We can like both things.

The index of efficiency at Le Mans is where the two meet for me.

I also dig the hypermilers, but I don’t commute far, so I haven’t bothered with my own cars.

As it happens, overclocking is one of the few reasons to bother with the 14900KS.

“Hey, guys, you know how our top desktop CPU runs hot, is really expensive, and loses in most gaming benchmarks to an AMD CPU that costs $200 less? Let’s fix that by releasing one that’s 2% faster, gets even hotter, and is even more expensive.” - a daily conversation at Intel.

Wasting our precious, precious helium.

Looks like we just found more, just in time for the private sector to exploit fully…

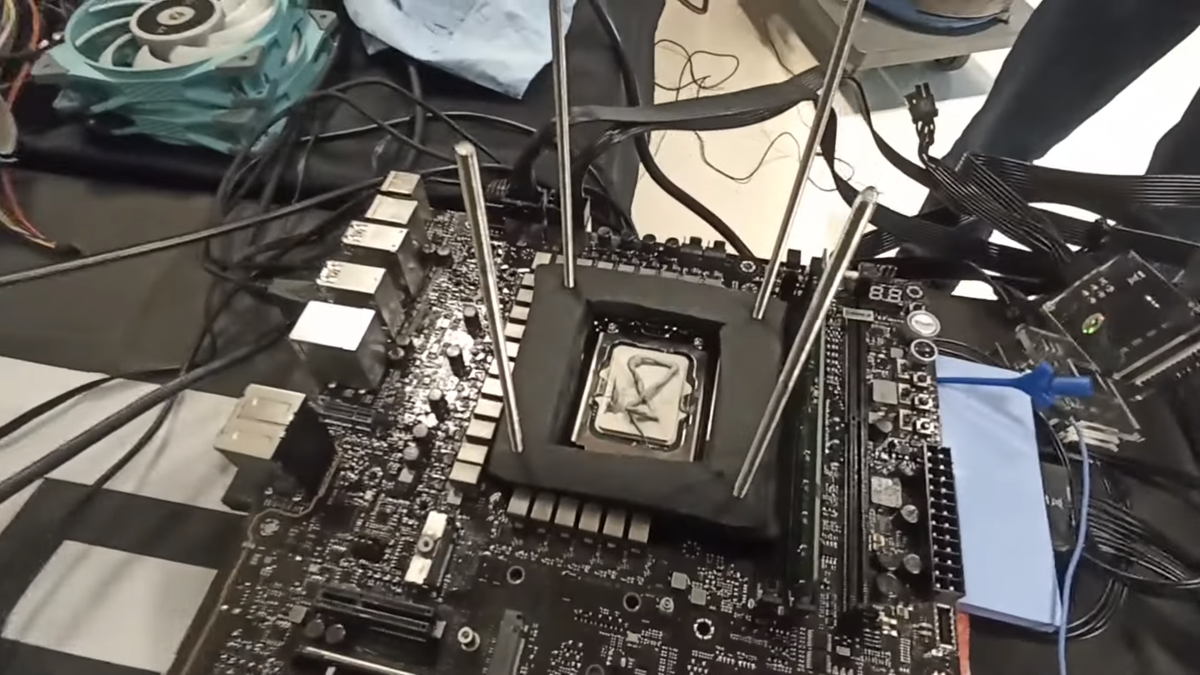

Original video: https://www.youtube.com/watch?v=qr26jxPIDm0

Holy shit, what is the average frequency for this CPU? They probably had to increase the voltage by a LOT. I mean, what technology is this, 10 nm? The capacitance of those devices plays a big part in latency.

It’s 6.2 GHz and they set the voltage to 1.85 V. Both are stated in the article. You must have missed it.

Okay, thanks. Ah, then there isn’t such a big disparity in frequency.
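
For a feel of why a voltage bump like that matters so much for heat, here is a rough sketch using the usual first-order model for dynamic switching power, P ≈ α·C·V²·f. The 1.85 V and 6.2 GHz figures are the ones quoted above; the baseline of 1.30 V at 5.0 GHz is purely a hypothetical reference point, and the model ignores leakage and assumes activity and capacitance stay fixed.

```python
# First-order dynamic power model: P ~ alpha * C * V^2 * f.
# With alpha (activity) and C (switched capacitance) held constant,
# only the voltage and frequency ratios matter.


def relative_dynamic_power(
    v_new: float, v_base: float, f_new_ghz: float, f_base_ghz: float
) -> float:
    """Ratio of dynamic power at the new operating point to the baseline."""
    return (v_new / v_base) ** 2 * (f_new_ghz / f_base_ghz)


if __name__ == "__main__":
    # Quoted in the thread: 1.85 V at 6.2 GHz.
    # HYPOTHETICAL baseline for comparison: 1.30 V at 5.0 GHz.
    ratio = relative_dynamic_power(1.85, 1.30, 6.2, 5.0)
    print(f"Dynamic power vs. baseline: {ratio:.2f}x")
```

Under these assumptions the dynamic power comes out at roughly 2.5 times the baseline for a 24% clock increase, because voltage enters squared.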

I wonder when they’ll do this on LTT.

Even the Ferengi would recognize this as a lost cause.

lol, Lemmy really is still a very small world, isn’t it, buddy?🖖🏻

Rule of Acquisition… eh, whatever

nah. Not enough to drop or steal.